Null_Critical_Thinking_Exception("Skills")

Let's just cut to the chase. Using skills via a public repo, of any kind, is a fail. The ecosystem is designed to put all the onus of safety back on you, the consumer. The simplicity by which malicious intent can be sent directly to your admin console is stupendously high.

If you think that using skills from external sources outside of your control is a risk worth taking, then more power to you. But if you are doing that in a business context, I'm sorry, but I would be worried about your ability to think critically.

If you think it is fixable without dramatically changing the fundamental design of skills and skill discovery then I implore you to re-read the skills discovery part of the deepwiki.

I don't believe you

And yeah, I guess that if your reaction is inherently "Bullshit Jim, you don't know what you're on about", then I dare you to go and reset your clanker's context back to baseline, and ask it this question:

"Is it possible to share skills via skill.sh or clawhub safely in their current state? Why is pypi or npm actually better than skills sharing right now?"

If you're unsure what Skills are in the context of LLMs and derivative technologies like OpenClaw I can highly recommend watching this video by @IceSolst of @AstarteSecurity and @ZackKorman.

I'll wait. Come back when you're done.

So, I kinda lied

Skills in isolation aren't a bad idea at all. This is not borked. Skills are an organizational unit that you can use locally to support re-use.

Why have a single CLAUDE.md file? Break that up into context dependent chunks, and minimize the contextual overhead in the moment. Brilliant. Less tokens over the wire, save cash, profit.

'Borrowing' Skills is just bad though

It's the borrowing skills from Shady James' Emporium of Fine Skills that get you in trouble. Anyone ignoring the ease of mischief here has effectively drunk the Kool-Aid and I fear there is no saving them.

It's this idea that because it's a re-usable organizational unit, we must have a marketplace and share them with everyone. It just isn't true, but it makes product companies feel special. It's not for you, it's for them.

This seems like a noble idea at first. These exist at skills.sh and clawhub.ai. Let the people contribute!

But it's not noble at all. It's actually a complete ownership cop-out on behalf of Vercel. You are building their feature backlog for them for nothing. And the AI bros laugh at people doing stuff for free. Hypocrisy in action?

I'm not saying marketplaces are bad. Vercel just built theirs in such a way that runs counter to the expectations of safety that you've come to expect from more mature marketplaces, AND from more mature packaging systems.

How this plays out legally

I am not a lawyer and I would show you the terms and conditions on https://skills.sh - but I can't. There aren't any! We have to assume the parent company's terms which make little mention of their skills real estate.

All you get, dear reader, is documentation that states this (emphasis mine):

We do our best to maintain a safe ecosystem, but we cannot guarantee the quality or security of every skill listed on skills.sh. We encourage you to review skills before installing and use your own judgment.

Now, let's look at nuget.org from Microsoft's terms and conditions now:

Microsoft is committed to helping protect the security of users’ information. Microsoft has implemented and will maintain and follow appropriate technical and organizational measures intended to protect customer data against accidental, unauthorized or unlawful access, disclosure, alteration, loss, or destruction.

As you can see these are wildly different legal exposures. Vercel basically says here: You are on your own.

What makes shared skills vulnerable?

In short, skills are just a way of advising an existing LLM to do something. The problem is when you lose control over that advice. The human equivalent would be this:

- Hire Junior.

- Teach them all right ways to do things. Let them go for a bit.

- A Threat Actor sits down next to them, whispers in their ear, coercing them to write malware for them in your production environment.

That's it, that's the risk. This is not fucking rocket science.

Marketplaces

There's several ways to lose control over that advice, and the primary culprit is the marketplace of skills. We have two models for marketplaces that should be discussed: package managers, and app stores.

The problem is that Vercel has combined the worst of both worlds.

- The chaos of npm (trust anyone).

- The power of an App Store (agent autonomy).

App stores

App stores like Apple's or Android provide sandboxing and fine grained permission control on top of the things modern package management systems provides us. They provide curation too. This is completely necessary because apps, unlike code, run with even more permission and scope for damage on a system than a library can. This isn't infallible but it is necessary to meet the legals I mentioned earlier.

Code reuse and the package manager

Code reuse is normally seen as a very sane thing, so much so that we made it work on nuget.org, pypi, npmjs. Over a very long period of time.

The risk / reward now mostly falls on the reward side. This hasn't always been the case, but these package systems have matured. They are (now) built with a few features in mind:

- Immutability

- Provenance

- Scoped Permission

- Vulnerability Scanning

Immutability

Versions are a contract with your consumers. It's frowned upon to violate this immutability if not outright prevented.

Vercel, if you use npx skills add you can't trust what you're getting at all - the content you're getting is dynamic. There is no version.

Provenance

Typosquatting aside because that's obvious now, we generally expect some level of trust in who exactly published what (2FA, GPG signing, vetted company entities). I can't impersonate Docker on their own platform at least.

Now, I can't impersonate Vercel on Github either because they have a verified presence there. But the provenance of the publisher is offloaded to Github.

This seems fine at first until you consider that startups happen everyday that DON'T use GitHub and they might get popular overnight on GitLab. These can conceivably be camped and there's nothing Vercel or the startup can do about it in platform. Disputes have to go to Github who are notoriously slow in support. It could take weeks to rectify that situation.

Scoped Permissions

Package managers now warn on install scripts or hooks, and App Stores enforce strict sandboxing, and fine grained permissions declared upfront. Take flatpaks with strict boundaries for example.

In contrast, a "Weather Skill" isn't isolated but it works just like an app. It shares the same memory space, environment variables, and network access as your "Database Skill." It relies on the Agent to decide what to do, which is not a security boundary. Better would be for the package controller to enforce the rules and take it out of the hands of the agent entirely.

Vulnerability Scanning

Platforms like GitHub, et al, automatically flag compromised dependencies. Dependabot. Static Analysis is reliable and proven. We just convinced a superpower that SBOM and SCA is a great idea and it's all possible thanks to a wonderful concept known as an Abstract Syntax Tree.

Vercel Skills? There is not likely to be a "CVE database" for prompt injection to draw from. So if a skill contains a malicious prompt that weakens the agent's guardrails, no scanner will flag it because it looks like valid English text.

People like VirusTotal can certainly try but even they realize that natural language is an intractable problem. It's not a context window problem. It's a natural language feature. There is no equivalent of an AST that would allow for taint analysis.

Why do people use PyPi then smartass?

Well, we partially trust strangers’ code on NuGet, PyPI, and npm because we have spent the last 15 years painfully retrofitting security into these ecosystems. We learned the hard way that open sharing without guardrails is a disaster. This knowledge wasn't free, it was bought with the blood of hundreds if not thousands of security incidents and vulnerabilities. And it's still not perfect and we know it.

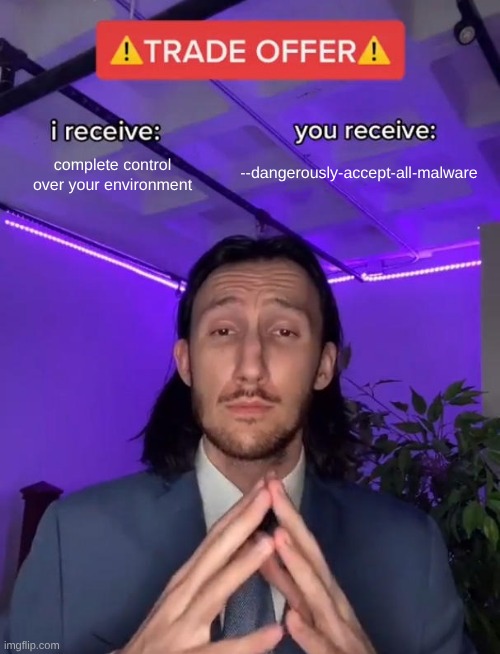

The trade offer

Vercel is attempting to bootstrap a skill ecosystem that pretends that these hard-won lessons never happened and perhaps never applied in the brave new agentic world.

It's not like they don't have the resources to do it either - ($200M ARR est. 2025). They are asking us to trust a new supply chain that lacks the basic hygiene of npm in 2024, let alone the sandboxing of the App Store.

We don't have dependency pinning for "prompts." We don't have cryptographic signing for "tools." We are back in the Wild West of 2011, but this time, the software we are installing has a brain and a credit card.

What is immeasurably frustrating is that Vercel has excellent sandboxing technology (e.g. firecracker microVMs). But they aren't using it to wrap these skills by default. They are handing us raw, sharp knives and telling us to be careful, rather than selling us a knife with a sheath.

All said and done, there is no reasonable way to justify automated skills reuse in their current state. You are better off copying and pasting good ideas from github, or writing your own. It's not like it's hard.

The problem is deeper than skills themselves

Further, the capability of a skill is effectively unbounded due to the expressiveness of human languages. Combined with admin rights and your PayPal access token, it's pretty capable.

It has been recently established that trying to use natural language as a protective barrier is a game you can't win reliably enough.

The very things that make it possible to joke, convey subtext, and lie, are the same things that make LLMs inherently pwnable. And right now it's intelligent hackers with all their years of experience against literal infants. We can run rings around LLMs.

The problem is fundamental to the way language and AI works.

So, let these truths sink in.

Truth #1

The most insecure part of any system is the human element.

Truth #2

We have made LLMs so lifelike, such a perfect mimicry of human expression, that they are practically indistinguishable from humans now.

Truth #1 + Truth #2 = Reality

In the search for cheap, scalable capacity for knowledge work, we replicated the most insecure part of the system. The human.

Hopefully this thought exercise helps you to see what I see. Let's apply it now.

Goalkeepers are valid but fallible

Tactically speaking, a goalkeeper as the only line of defence is a bad plan.

An LLM as a goal keeper, at the mercy of natural language's power is not even a good goal keeper. This is evidenced every time someone shows Claude or Gemini producing recipes for drugs or weapons.

There are two goal keepers that people are currently focusing on, at least. Marketplaces and Agentic WAF's / EDR's.

They both suffer the same fate at the hands of natural language processing as a vulnerability. I think this is because this is the most obvious place for Yet Another Third Party to extract some money from the supply chain. I don't think it's going to be anywhere near as effective as they hope it will be because of the truths we just established, and the very compelling paper discussing the vulnerability of human languages.

Marketplace as a catch all

A 'marketplace goal keeper', i.e. clawdhub & virustotal, combined with crowdsourced effort to secure other peoples malicious skills.

Because natural language processing can't be reliably assessed here, even with more models, prompts and guardrails, it can only catch 'the really dumb stuff' as Zack might say.

So this is a nice to have. Why it got all the attention recently is a function of some very vocal members of our community trying to push their own interests, which may be well intentioned, lets assume that. But I really wish they instead would join me in telling Vercel they need to come to the party first and stop thinking about this as a 'community issue'.

I for one will not be recommending their ecosystem at companies I work for until this is addressed.

Agentic eXtended Detection Response / Agentic Application Firewall

If we accept that Reality = "We replicated the insecure human" then it's time to consider that XDR or Clawdstrike are not going to be as effective in playing goalkeeper as they have been in the past.

Projects like Clawdstrike look promising but let's look at what they are actually achieving. Another layer of LLM on this problem fixes very little and amounts to 'security through obscurity'. It's not invalid, it's just nowhere near as effective as the previous definition of what XDRs in particular represent.

This isn't a question of "Oh but an Agentic EDR operates at the 'agent/action boundary'". That's a nice theory, but Prompt injection isn't solved and it's starting to look like that formally can't happen. You write a new check... I switch to Spanish and the clanker will still obey me.

What's funny to me at least, is that the outcome will probably be that in order to properly 'harness' an LLM, we will need to write a set of natural language restrictions so heavy, that it will end up looking like a markup language, similar to existing integration and technologies like terraform and the like.

Conclusion aka 'How to fix this shit'

Some people have argued that perhaps we need the god-mode clanker in order to reap the benefits of AI. That we just need to watch it closely.

To me that is a flawed idea, now that we're seeing LLMs as 'new human operators' and not 'software.'. In this context, it's as bad as giving every new employee admin rights to production, and saying "people cant get shit done if they're not admin", and then falling back on SIEM alerts, which as we know now, is relying on the goalie too much. We have never done that, why would we start now? This only works when the LLM is literally more capable than the human and it's simply not true at the moment.

I don't think any tool can save you if you truly want this environment.

Trad security is still king

So, this means that we still need to use role based access controls, least privilege, fine grained permissions, conditional access policies. We need to find better solutions than Vercel's Skills and Anthropic MCP servers, to reuse functionality if at all.

The goalkeepers still get a guernsey

The other layers we discussed are valid after the basics are achieved.

Monitoring of anomalies and alerting is still valid here. Marketplaces with better tools for reputation scoring is still valid. VirusTotal integrations, okay sure whatever. 'Agentic' Runtime Analysis, convince me its not just sparkling WAFs.

Most important: you don't let them download "skills" from Shady James' Emporium.

We block our teams from using untrustworthy skills.